So I guess I'm doing something I shouldn't be. That's not unusual.

I have a pair of data-store servers that are mirrored with DRBD. Pacemaker is providing the cluster management, and for serving up a cluster-friendly file system I chose NFS because, well, I could. That, and OCFS2 has just been too much of a headache lately; with a move to Proxmox, I think its days in my network are numbered. This NFS deployment was a trial, and thank goodness that it was!

I set up Pacemaker along the lines of what you'll find in most tutorials, including those from LINBIT themselves. Great stuff!

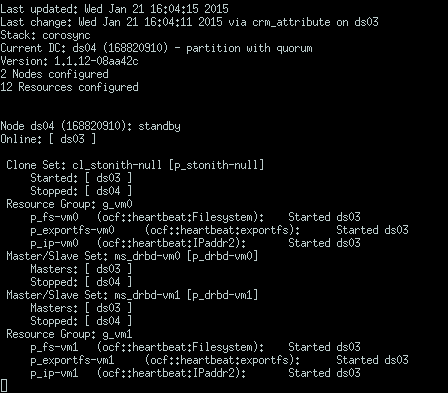

So, I had initially configured a single NFS export, vm0, and it worked well. It seemed to transition cleanly from server to server, and I was happy. Then I set up a second NFS export, vm1. Lately I've been moving some of my inactive VM images over to it (I have a pair of Proxmox hypervisors running against this data store cluster). I was feeling a little dangerous and decided to put one of the nodes into standby.

And disaster struck.

I won't go into the gory details, but the problem itself and its solution are worth noting here. Firstly, the initial symptom was that the Proxmox NFS clients couldn't talk to the export that got migrated. In this case, it was vm0 migrating from ds03 to ds04. The reported error was that the NFS handle was stale. That didn't make much sense, because there shouldn't have been any handles left on ds04 for the vm0 share. However, rmtab (the file where the NFS server records which clients have mounted which exports) doesn't really get cleaned up - unless you are using NFSv4 over TCP, and even then I'm only going by what preliminary research has turned up; I have yet to test it myself.

So, the rmtabs on the two data servers were out of sync with each other. The documentation I've been able to find on setting up HA NFS only ever discusses having one share, or at least only one server being active at a time. Spreading the load across multiple servers via multiple NFS exports is novel, but this is the gotcha: if you don't have the rmtabs sync'd up, you will pay the price when your export moves from one data server to another.

In the case of an active/passive cluster, this shouldn't be an issue: the only rmtab you have to worry about is the one on the active node. Make sure it's actively replicated to the passive node, and your handles will be fine. That doesn't work with active/active: both nodes are modifying their rmtabs at the same time.

There doesn't appear to be any clean, official way to clean up rmtab. One way could be to use sed to delete any entries that don't belong on the given server. So, in ds03's case, it shouldn't have any entries for vm1. That's easy enough, and we could probably even script it in bash and run it as a cron job every minute. However, I'm not sure how that will work out if both nodes don't have equivalent rmtabs... I get the feeling, from what I saw online, that a node missing the appropriate entries will yield a stale handle error. Example: we clean ds03's rmtab of all vm1 entries, then put ds04 into standby. vm1 must now be served by ds03. My guess is that this will result in stale handles (very bad).
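To make the "delete entries that don't belong" idea concrete, here is a rough sketch in Python rather than sed. It assumes rmtab lines look like `client:/export/path:0xCOUNT`, which is how nfs-utils normally writes them; the export path `/srv/nfs/vm1`, the client addresses, and the function names are all made up for illustration, and nothing here has been run against a live cluster.

```python
def parse_rmtab_line(line):
    """Split 'client:/export/path:0xCOUNT' into (client, path, count).

    Returns None for blank or malformed lines. Splitting from the right
    is an assumption that tolerates colons in the client field, but not
    in the export path.
    """
    line = line.strip()
    if not line:
        return None
    parts = line.rsplit(":", 2)
    if len(parts) != 3:
        return None
    return tuple(parts)


def filter_rmtab(lines, unwanted_exports):
    """Return rmtab lines that do NOT refer to any of the unwanted exports.

    An entry is dropped when its path equals an unwanted export or lives
    beneath it. Blank and malformed lines are dropped as well.
    """
    kept = []
    for line in lines:
        entry = parse_rmtab_line(line)
        if entry is None:
            continue
        _client, path, _count = entry
        dropped = any(
            path == export or path.startswith(export.rstrip("/") + "/")
            for export in unwanted_exports
        )
        if not dropped:
            kept.append(line.strip())
    return kept


if __name__ == "__main__":
    # Hypothetical contents of ds03's rmtab.
    sample = [
        "10.0.0.5:/srv/nfs/vm0:0x00000001",
        "10.0.0.6:/srv/nfs/vm1:0x00000002",
    ]
    # ds03 should not carry vm1 entries in this scenario.
    for entry in filter_rmtab(sample, ["/srv/nfs/vm1"]):
        print(entry)
```

A real cron job would also have to read and rewrite the actual file (usually `/var/lib/nfs/rmtab` on Linux) without racing the NFS daemons, which this sketch deliberately leaves out. And as noted above, stripping entries may be exactly what causes stale handles after a failover.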

As an alternative to just nuking unwanted entries, we can combine the rmtabs from the two nodes so that their respective handle records are valid between both machines. In this way, each one is active, and each one is passive to its peer. I am not sure yet how exactly I'd script this out, since both servers need to have the same rmtab at the end of the day, but both are modifying it. My first instinct would be to make a cron-driven script on one of the nodes that nabs the rmtab from the other node, cleans both the local and remote rmtabs, and then combines them and distributes them out. This would keep both nodes in sync. This might even work well, though maybe only where the rmtab doesn't change frequently and your NFS client membership is limited to a set of servers that are supposed to be online all the time.
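The combining step could look something like the sketch below. It only handles the merge itself: given the lines from both rmtabs, it produces one list with a single entry per client/export pair. The same `client:/export/path:0xCOUNT` line format is assumed, the sample addresses and paths are invented, and keeping the larger of two counts for a duplicate pair is purely my guess at a sane rule, not something taken from the NFS documentation.

```python
def merge_rmtabs(local_lines, remote_lines):
    """Union two rmtabs, keyed by (client, export path).

    Assumes lines of the form 'client:/export/path:0xCOUNT'. When both
    sides list the same client/path pair, the larger count is kept; that
    rule is an assumption made for this sketch. Blank or malformed lines
    are skipped.
    """
    merged = {}
    for line in list(local_lines) + list(remote_lines):
        parts = line.strip().rsplit(":", 2)
        if len(parts) != 3:
            continue
        client, path, count = parts
        try:
            value = int(count, 16)  # accepts the '0x' prefix
        except ValueError:
            continue
        key = (client, path)
        merged[key] = max(merged.get(key, 0), value)
    # Sorted output so both nodes end up with byte-identical files.
    return [
        "%s:%s:0x%08x" % (client, path, value)
        for (client, path), value in sorted(merged.items())
    ]


if __name__ == "__main__":
    # Hypothetical rmtab contents from the two nodes.
    ds03 = ["10.0.0.5:/srv/nfs/vm0:0x00000001"]
    ds04 = ["10.0.0.6:/srv/nfs/vm1:0x00000001"]
    for entry in merge_rmtabs(ds03, ds04):
        print(entry)
```

Fetching the peer's file, writing the result back on both nodes, and doing so safely while both servers are still modifying their rmtabs are the hard parts, and they are not addressed here.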

Anyway, there you have it: beware the rmtab, it will be a small nightmare if you're not careful!!